10 practical steps to efficient web data harvesting

Contents of article:

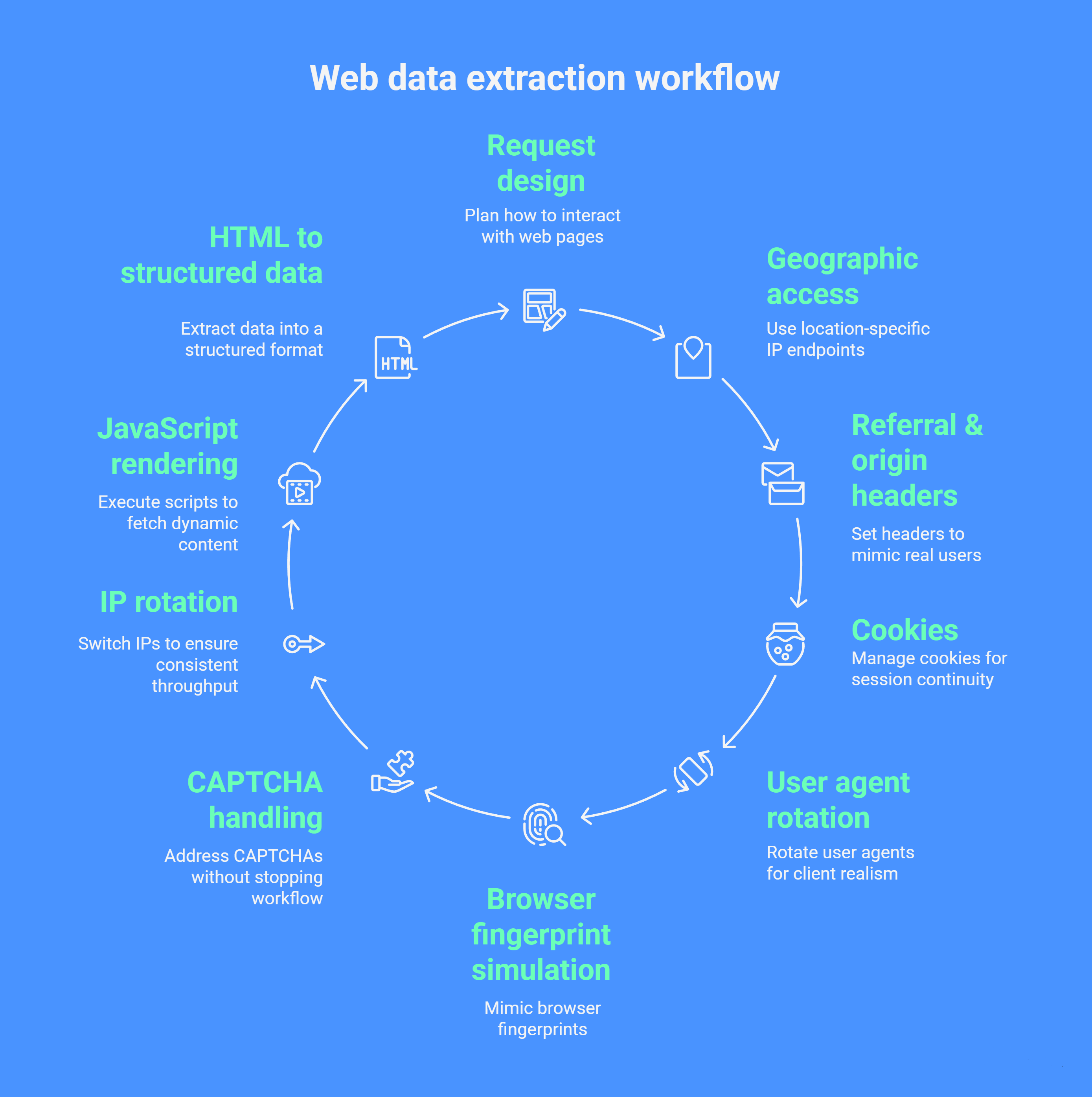

- Request design

- Geographic access

- Referral & origin headers

- Cookie & session management

- User agent rotation

- Browser fingerprint simulation

- CAPTCHA handling

- IP rotation

- JavaScript rendering

- HTML to structured data

Designing a comprehensive framework for web data extraction in 2026 requires a structured workflow. This article outlines a 10-step process that can be combined with services built to scale data gathering, such as Dexodata. Paired with solutions that let you buy residential and mobile proxies, these practices help teams run efficient geo-targeted scraping for downstream analytics, BI, or AI pipelines.

Request design

Request design lays the groundwork for web data gathering at scale by isolating request logic from the parsing and extraction layers. This step defines how your program interacts with web pages: you can make simple HTTP requests (fetching raw HTML) or drive a headless browser for JavaScript-heavy sites:

- Use HTTP clients for static content (Python “Requests”, Node.js “Axios”).

- Use headless browsers for dynamic sites (Playwright, Puppeteer).
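
One way to keep request logic isolated from parsing is to hide the transport behind a small interface. The sketch below is illustrative only: `Page`, `Fetcher`, `HttpFetcher`, `StaticFetcher`, and `scrape` are hypothetical names, and it uses Python's standard `urllib` rather than Requests so that it runs without extra dependencies. A headless-browser fetcher could implement the same `fetch` method.

```python
import urllib.request
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Page:
    url: str
    status: int
    html: str


class Fetcher(Protocol):
    """Anything that can turn a URL into a Page (HTTP client, headless browser, ...)."""

    def fetch(self, url: str) -> Page: ...


class HttpFetcher:
    """Plain HTTP fetcher for static content (standard library only)."""

    def __init__(self, timeout: float = 10.0) -> None:
        self.timeout = timeout

    def fetch(self, url: str) -> Page:
        with urllib.request.urlopen(url, timeout=self.timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return Page(url=url, status=resp.status, html=body)


class StaticFetcher:
    """In-memory fetcher that returns canned HTML; useful for testing parsers offline."""

    def __init__(self, pages: dict[str, str]) -> None:
        self.pages = pages

    def fetch(self, url: str) -> Page:
        return Page(url=url, status=200, html=self.pages[url])


def scrape(url: str, fetcher: Fetcher, parse: Callable[[str], dict]) -> dict:
    """Fetch with whichever transport was injected, then hand the HTML to the parser."""
    page = fetcher.fetch(url)
    return parse(page.html)
```

Because `scrape` only depends on the `fetch` method, the parser can be developed against `StaticFetcher` and later run unchanged against a real HTTP or browser-based fetcher.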

Geographic access

Some websites serve different content depending on your IP address. Location-specific IP endpoints enable geo-targeted scraping for different regions, such as Europe, the USA, Russia, or specific cities. This can be used to compare prices and product availability across markets, or to run other comparative analytics.

For monitoring purposes:

- Log location metrics to determine whether different geos yield different content versions.

- Maintain metadata about resolved IP geolocation for each request.
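
The two monitoring points above could be sketched as follows. This is a minimal illustration, not a prescribed schema: `GeoObservation`, `observe`, `content_versions`, and `geo_mismatches` are hypothetical names, and the resolved geolocation is assumed to come from whatever IP lookup your stack already provides.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class GeoObservation:
    url: str
    requested_geo: str  # location requested from the proxy, e.g. "DE"
    resolved_geo: str   # location the exit IP actually resolved to
    content_hash: str   # SHA-256 of the response body


def observe(url: str, requested_geo: str, resolved_geo: str, body: str) -> GeoObservation:
    """Record per-request geo metadata together with a fingerprint of the content."""
    digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
    return GeoObservation(url, requested_geo, resolved_geo, digest)


def content_versions(observations):
    """For each URL, map every distinct content hash to the set of geos that received it."""
    versions = defaultdict(lambda: defaultdict(set))
    for obs in observations:
        versions[obs.url][obs.content_hash].add(obs.resolved_geo)
    return versions


def geo_mismatches(observations):
    """Return observations where the exit IP did not resolve to the requested location."""
    return [obs for obs in observations if obs.requested_geo != obs.resolved_geo]
```

Hashing the raw body is deliberately crude: pages with timestamps or rotating banners will hash differently on every request, so in practice you would likely hash only the extracted fields you care about.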

Referral & origin headers

HTTP headers like “Referer”, “Origin”, and “Accept-Language” tell the website where the request came from and which language the browser prefers. Setting these headers properly makes your requests look more like those of real users. Consistent header management also improves the reliability of rotating proxies with high uptime in large-scale operations.

Use real-browser header templates and monitor headers that consistently trigger anomalies (404, CAPTCHA, redirect).
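
A header template can be kept as plain data and specialized per request. The sketch below is an assumption-laden illustration: `BASE_HEADERS`, `origin_of`, and `build_headers` are hypothetical names, and the template values are examples rather than a capture from any specific browser. Note that browsers normally send “Origin” on cross-origin and non-GET requests, not on ordinary page navigations, so the sketch makes it opt-in.

```python
from urllib.parse import urlsplit

# Illustrative template; in practice, copy values from a real browser session.
BASE_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def origin_of(url: str) -> str:
    """Return the scheme://host[:port] part of a URL, as used in the Origin header."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}"


def build_headers(referer=None, accept_language=None, send_origin=False):
    """Build request headers from the template without mutating it."""
    headers = dict(BASE_HEADERS)
    if accept_language:
        headers["Accept-Language"] = accept_language
    if referer:
        headers["Referer"] = referer
        if send_origin:
            # Origin is derived from the referring page, mimicking an in-page request.
            headers["Origin"] = origin_of(referer)
    return headers
```

For example, `build_headers(referer="https://shop.example.com/cat/1", accept_language="de-DE,de;q=0.9", send_origin=True)` yields headers that are consistent with a German-language visitor arriving from a category page.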

Cookie & session management

Cookies store session information like login tokens, user preferences, or consent flags. Without cookie management, repeated requests may fail or return incomplete data. Cookies play a critical role in session continuity, authentication, and content gating.

- Use per-session cookie jars to save and replay cookies.

- Separate cookies by task to avoid conflicts.
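
Both points above can be sketched with Python's standard `http.cookiejar`. `SessionPool` and the task names are hypothetical; the idea is simply one jar per task, each wired into its own opener so cookies set by one task are never replayed by another.

```python
import urllib.request
from http.cookiejar import CookieJar


class SessionPool:
    """Keeps one cookie jar per task so sessions never share cookies."""

    def __init__(self) -> None:
        self._jars: dict[str, CookieJar] = {}

    def jar(self, task: str) -> CookieJar:
        """Return the jar for a task, creating it on first use."""
        if task not in self._jars:
            self._jars[task] = CookieJar()
        return self._jars[task]

    def opener(self, task: str) -> urllib.request.OpenerDirector:
        """Build an opener that stores and replays cookies from the task's jar."""
        processor = urllib.request.HTTPCookieProcessor(self.jar(task))
        return urllib.request.build_opener(processor)
```

Requests made through `pool.opener("pricing")` accumulate cookies in the "pricing" jar only. If sessions need to survive restarts, `http.cookiejar.MozillaCookieJar` offers the same interface with `save` and `load` methods for a cookie file.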

User agent rotation

The “User-Agent” (UA) string identifies the device and browser. It influences how servers classify your client. When paired with rotating proxies with high uptime, UA management significantly increases resilience during geo-targeted scraping.

- Maintain a pool of up-to-date browser UAs (desktop, mobile).

- Combine UA rotation with other identity vectors (IP geolocation, viewport size, timezone) to strengthen client realism.
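
One way to keep the UA consistent with other identity vectors is to rotate whole profiles rather than bare UA strings. The sketch below is hypothetical throughout: `ClientProfile`, `PROFILES`, and `pick_profile` are invented names, and the UA strings, viewports, and timezones are placeholder values that would need regular refreshing against current browser releases.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ClientProfile:
    user_agent: str
    viewport: tuple[int, int]
    timezone: str
    accept_language: str


# Placeholder values for illustration; refresh these from current browser releases.
_DESKTOP_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
_MOBILE_UA = (
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Mobile/15E148 Safari/604.1"
)

# Profiles are grouped by proxy geo so timezone and language match the exit IP.
PROFILES = {
    "US": [
        ClientProfile(_DESKTOP_UA, (1920, 1080), "America/New_York", "en-US,en;q=0.9"),
        ClientProfile(_MOBILE_UA, (390, 844), "America/New_York", "en-US,en;q=0.9"),
    ],
    "DE": [
        ClientProfile(_DESKTOP_UA, (1920, 1080), "Europe/Berlin", "de-DE,de;q=0.9,en;q=0.8"),
        ClientProfile(_MOBILE_UA, (390, 844), "Europe/Berlin", "de-DE,de;q=0.9,en;q=0.8"),
    ],
}


def pick_profile(session_id: str, geo: str) -> ClientProfile:
    """Pick a profile deterministically so one session always presents one identity."""
    candidates = PROFILES[geo]
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return candidates[digest[0] % len(candidates)]
```

Hashing the session ID instead of calling `random.choice` on every request means rotation happens between sessions, not within one, which avoids a session that changes browser mid-visit.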

Browser fingerprint simulation

Websites sometimes use browser fingerprints (screen resolution, fonts, WebGL features) to detect automated tools. Mimicking fingerprints means making requests appear consistent with real browsers.

You can inspect the attributes a real browser exposes to see what your scrapers need to reproduce.

CAPTCHA handling

CAPTCHAs are designed to prevent automated access. When sites deploy interactive challenges, you need to handle them without stopping the workflow. A CAPTCHA is best handled by not triggering it in the first place: solving challenges remains one of the hardest parts of web data gathering at scale, even with AI tools. When a challenge is unavoidable, techniques include:

- Automated solvers.

- Human-in-the-loop verification.

- Alternative API endpoints when possible.

IP rotation

If a request fails, your system should retry automatically and, if needed, switch to another IP. To keep throughput stable and fault-tolerant on large workloads, govern IP rotation with explicit retry and health-management rules:

- Use exponential backoff + jitter for retries to avoid retry storms.

- Maintain metrics per IP / endpoint: success rate, latency, error types.

- Rotate IPs automatically when failure thresholds are met.
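
The three rules above can be sketched as follows. This is one possible shape, not a reference implementation: `backoff_delay`, `IpStats`, and `IpRotator` are hypothetical names, the thresholds are arbitrary defaults, and the backoff uses the "full jitter" variant (a uniform random delay up to the exponential ceiling). Only success and failure counts are tracked here; latency and error types could be added to `IpStats` in the same way.

```python
import random
from dataclasses import dataclass


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0, rng=random.random) -> float:
    """Full-jitter backoff: random delay between 0 and min(cap, base * 2**attempt) seconds."""
    return rng() * min(cap, base * 2 ** attempt)


@dataclass
class IpStats:
    successes: int = 0
    failures: int = 0

    @property
    def total(self) -> int:
        return self.successes + self.failures

    @property
    def failure_rate(self) -> float:
        return self.failures / self.total if self.total else 0.0


class IpRotator:
    """Tracks per-IP health and moves to the next IP once a failure threshold is crossed."""

    def __init__(self, ips, max_failure_rate: float = 0.5, min_samples: int = 4) -> None:
        self.ips = list(ips)
        self.stats = {ip: IpStats() for ip in self.ips}
        self.max_failure_rate = max_failure_rate
        self.min_samples = min_samples
        self.index = 0

    @property
    def current(self) -> str:
        return self.ips[self.index]

    def record(self, ok: bool) -> None:
        """Record the outcome of a request made through the current IP; rotate if unhealthy."""
        stats = self.stats[self.current]
        if ok:
            stats.successes += 1
        else:
            stats.failures += 1
        # Require a minimum sample size so one early failure does not trigger rotation.
        if stats.total >= self.min_samples and stats.failure_rate > self.max_failure_rate:
            self.index = (self.index + 1) % len(self.ips)
```

A retry loop would call `time.sleep(backoff_delay(attempt))` between attempts and `rotator.record(ok)` after each one. The jitter matters because many workers retrying on the same fixed schedule would otherwise hit the target in synchronized bursts.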

JavaScript rendering

Modern websites increasingly rely on client-side logic to fetch and display content. If your system only collects raw HTML without executing scripts, you’ll often miss most of the actual data. Solutions include browser-based and browserless approaches:

- For simple APIs: some pages fetch their data from background APIs. Inspect those calls and reuse the endpoint URLs directly in your workflow.

- For full UIs: use tools that emulate full browser behavior and execute JavaScript, such as Playwright or Puppeteer.

HTML to structured data

Once content is fetched, you need to extract data into a structured format (JSON, CSV, database) for analytics. Extraction is where raw content becomes usable data.

To keep extraction accurate as target pages change:

- Use CSS / XPath selectors or semantic parsers.

- Map fields into typed records (date, price, location, identifier) and validate via schema.

- Monitor extraction failures, duplicates, missing fields; alert on schema drift.
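
The selector, typed-record, and validation steps above might look like this in miniature. To stay dependency-free, the sketch uses the standard library's `html.parser` and matches elements by class name instead of real CSS or XPath selectors. `FieldParser`, `Product`, `extract_product`, and the class names `sku`, `price`, and `listed-on` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from html.parser import HTMLParser


class FieldParser(HTMLParser):
    """Collects the text of elements whose class attribute names a wanted field."""

    def __init__(self, fields) -> None:
        super().__init__()
        self.fields = set(fields)
        self.current = None
        self.values = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for name in classes:
            if name in self.fields:
                self.current = name

    def handle_data(self, data):
        if self.current and data.strip():
            self.values[self.current] = data.strip()
            self.current = None

    def handle_endtag(self, tag):
        # Stop capturing at any closing tag so empty elements do not swallow later text.
        self.current = None


@dataclass(frozen=True)
class Product:
    sku: str
    price: Decimal
    listed_on: date


def extract_product(html: str) -> Product:
    """Parse one product block into a typed record; raise if a required field is missing."""
    parser = FieldParser({"sku", "price", "listed-on"})
    parser.feed(html)
    missing = parser.fields - set(parser.values)
    if missing:
        # A missing field is often the first visible symptom of schema drift.
        raise ValueError(f"missing fields: {sorted(missing)}")
    return Product(
        sku=parser.values["sku"],
        price=Decimal(parser.values["price"]),
        listed_on=date.fromisoformat(parser.values["listed-on"]),
    )
```

Counting how often `extract_product` raises per site gives a simple schema-drift signal: a sudden jump usually means the page layout changed and the selectors need updating.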

With Dexodata, you can buy residential and mobile proxies and combine them with geo-aware access, fingerprint management, and other techniques to build reliable, scalable pipelines. We offer millions of whitelisted real-peer IPs from more than 100 countries, including Germany, France, the UK, and Russia. New users can request a free trial to test the proxies.
